Should You Retrain That Model? (Part 3)

Best Practices for Efficient and Reliable Retraining

In Part 1, we explored the challenges of model degradation, including how it often goes unnoticed and why teams hesitate to retrain due to factors like shifting priorities. In Part 2, we discussed reframing the retraining decision as a practical tradeoff, balancing the benefits of updating now versus later, while highlighting how reducing operational overhead can make retraining a more straightforward process. In this final part, we'll share some tactical approaches that can help achieve that. These insights are based on our experiences at TrueTheta and observations from various ML teams we've collaborated with.

Our aim here is to promote reproducibility and simplicity, potentially turning retraining into a more routine and low-effort activity that's easy to track and manage. We'll touch on ways to enhance pipeline standardization, thoughtful investments in monitoring, strategies for managing complexity, and some self-assessment questions to evaluate your current setup.

Building Toward Reproducibility: A Key Enabler for Easier Retraining

Reproducibility is an essential and undervalued tenet of effective machine learning engineering, largely due to its easing of model retraining. When you can reliably recreate the training environment and data, initiating a refresh might become as straightforward as triggering a pipeline.

Tools like Git can handle code versioning, while DVC (Data Version Control) for datasets can help treat inputs similarly to source code, ensuring consistency through hashing. While this might involve some additional storage considerations for deduplication, teams we've worked with have found it worthwhile for achieving a streamlined "one-click" retrain process, where ETL, training, and evaluation run automatically. For prototyping, notebooks can be great, but for production, modular scripts or workflow templates, such as those from Cookiecutter Data Science, might offer more consistency.

It can also be helpful to standardize the computing environment. Options like Docker containers combined with dependency managers such as uv or Poetry can ensure consistent libraries and versions across runs. Establishing a single, audited source for data, like specific tables in your warehouse, may reduce the risk of duplicates and inconsistencies. For features, feature stores like Feast, Chalk, or Tecton provide a way to define them once (and if you find yourself defining features more than once, it could be a sign of inefficiency) and maintain training-serving alignment, potentially avoiding common issues where models perform differently in production.

Additionally, automating testing and evaluation through CI/CD pipelines (e.g., GitHub Actions or Jenkins) generate useful artifacts like offline metrics, confusion matrices, feature importances, and even setups for A/B testing in shadow mode. The idea is to make retraining easy, reliable and consistent, rather than manual, risky and unpredictable.

Thoughtful Monitoring and Evaluation: Balancing Automation with Human Insight

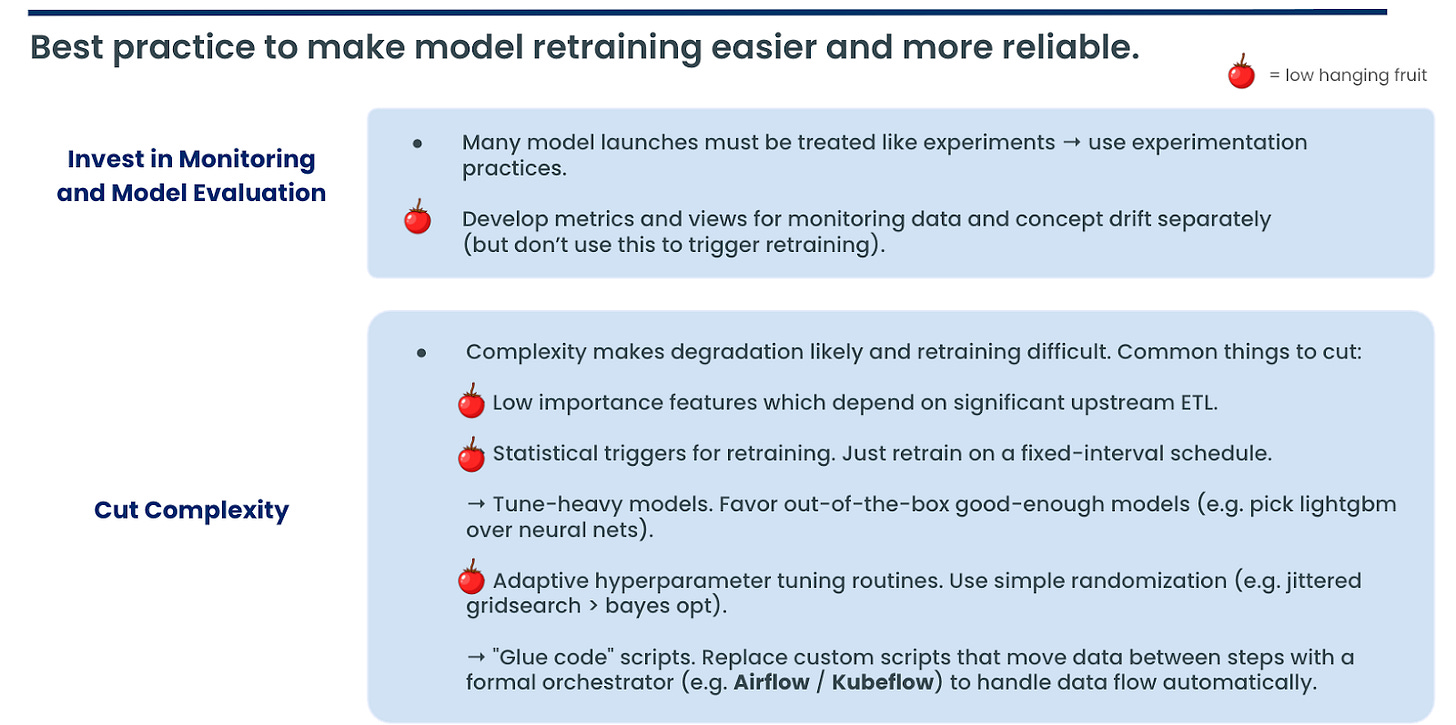

Monitoring plays an important role, but as we noted in Part 1, it might be most effective as a tool to support decision-making rather than as an automatic trigger for action.

You might find it useful to create distinct views for data drift (changes in P(X)) and concept drift (changes in P(Y|X)). For data drift, visualizing feature distributions over time, such as through histograms, can provide more context than relying solely on scalar metrics like KL divergence or KS tests, which sometimes flag non-critical shifts. For concept drift, tracking residuals between predictions and labels (where available, even if delayed) or simulating minor input perturbations in a staging environment could offer insights into model resilience. However, these are best used to understand the model's context, rather than determining the moment of retraining, since they are incomplete for that purpose.

Drawing from experimentation practices, techniques like A/B tests, shadow deployments, and holdout sets can be adapted for model updates. While univariate statistical thresholds may seem appealing and easy to implement, they can often miss the high-dimensional shifts in real-world data. Instead, the most effective strategy we've observed is to make retraining as easy and inexpensive as possible, allowing teams to perform it routinely, such as on a fixed schedule, without relying heavily on imperfect drift detection to trigger the process. This approach minimizes the need for complex decisions, with human oversight ensuring overall quality and relevance.

In practice, treating monitoring as a regular dashboard review can help spot issues early, like unexpected upstream changes in feature scales, while also serving broader purposes beyond just deciding when to retrain. For instance, it can build general knowledge of the model's environment, revealing patterns or shifts that inform day-to-day operations in unexpected ways. It also supports debugging by highlighting errors or anomalies that require fixes rather than a full retrain. Additionally, reviewing these insights often sparks ideas for model improvements, such as new features or architectural changes that go beyond simple retraining. For larger-scale models (e.g., in recommendation systems), it's worth considering the ongoing maintenance needs upfront. While powerful, they can require more resources; simpler alternatives like LightGBM might retrain more quickly and with less oversight, depending on your specific ROI calculations.

Managing Complexity: Streamlining for Sustainability

Reducing complexity can make retraining more manageable over time, as it may help minimize bugs, speed up processes, and make issues easier to identify. Here are some approaches to consider for creating a more maintainable setup.

With features, evaluating and potentially removing those with lower importance, especially if they depend on complex upstream ETL, could reduce potential points of failure. From both a modeling and operational perspective, a leaner feature set might enhance robustness and efficiency. Teams we've advised have sometimes seen benefits in stability and performance after such pruning.

Regarding triggers for retraining, a fixed-interval schedule (e.g., monthly) might be a practical alternative to statistical alarms, which can add unnecessary infrastructure. Routine retrains, enabled by lower costs, are a reliable, feasible way to capture nearly all the value of optimal model retraining.

When selecting models, simpler options like tree-based methods might offer good extrapolation and lighter tuning needs compared to more complex neural networks, which can demand more maintenance. Tree-based models are standard for noisy, market data with heterogeneous features, as they require modest tuning and often work well out of the box. For hyperparameter tuning, straightforward methods like random or grid search could maintain reproducibility while being efficient, potentially avoiding the added layers of adaptive optimization. Model tuning, where each iteration is a full model training procedure, is a slow, complexity-inducing process that is important to anticipate and manage.

Lastly, consolidating ad-hoc scripts into a structured orchestrator, such as Airflow or Kubeflow, might improve visibility and auditability, helping to resolve common data lineage questions during retrains.

Self-Assessment: Gauging Your Retraining Maturity

Here are some questions we've found helpful for teams to reflect on their processes.

1. Can the model developer initiate a retrain in under 10 minutes, perhaps with a simple pipeline trigger and minimal manual steps?

2. Would another team member be able to handle it easily? This can highlight the strength of your documentation and knowledge sharing.

3. Does the process produce sufficient artifacts, like metrics, visuals, and comparisons, to support a confident decision on whether to deploy the updated model?

4. Is deployment streamlined, such as through a PR approval where automation handles evaluations and a human provides final review? This can foster accountability, especially in regulated environments, using tools like GitHub Actions to populate details automatically.

If these align with your setup, it could indicate a solid foundation for routine retraining.

Encouraging Retraining as a Standard Practice

Retraining can become a natural part of maintaining model effectiveness and supporting business goals. By focusing on reproducibility, strategic monitoring, complexity management, and regular self-checks, teams might shift toward a more proactive stance where updates feel routine rather than exceptional. At TrueTheta, we've supported organizations in adopting similar patterns to enhance their model lifecycle. If this sparks ideas or if you'd like to explore presenting this framework to your team, feel free to reach out.

Thanks for following the series. We'd love to hear in the comments: What's one retraining challenge you've encountered, and how did you address it?

I'm curious if the same framework surrounding retraining is also true of video-based systems that train with licensed shows, movies. Do these degrade as well without retaining?